Don't Just Chat. Speak UI.

A2UI is the open standard protocol connecting Large Language Models (LLMs) to your Frontend.

Stop generating fragile HTML. Start building safe, native, and interactive Generative UI experiences.

The "Handshake" for Generative UI

A2UI (Agent-to-User Interface) isn't a library component; it's a protocol. It solves the biggest problem in AI today: How do intelligent agents safely control visual interfaces?

Instead of writing dangerous HTML, A2UI agents negotiate intent via structured JSON. Your app says "I can render a Chart," and the Agent says "Here is the data."

JSON, Not Code

Safe by design. The Agent sends a schema, your Codebase renders the view. No injections.

Native Performance

Zero visual lag. Renders as native React/Vue/Flutter components, indistinguishable from handwritten code.

Full Control

You define the design system. The Agent just uses the "Lego bricks" you provide.

Powering Next-Gen Agentic Experiences

Travel Agents

Don't just chat about flights. Show real-time availability, seat maps, and booking flows directly in the conversation.

Conversational Commerce

Turn browsing into buying. Display product carousels, size guides, and checkout buttons without leaving the chat.

Data Analyst

Visualise SQL queries instantly. Render interactive line charts, pivot tables, and heatmaps from raw data.

How A2UI Works

Define

Create a component registry in your codebase mapping JSON types to React/Vue components.

Prompt

Instruct your LLM (GPT-4, Gemini) to output JSON matching your schema.

Render

The `<AIOutput />` component parses the stream and renders native UI instantly.
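The Define and Render steps can be sketched in a few lines. This is a minimal, framework-free illustration, not the `<AIOutput />` implementation: the `UINode` shape, the `registry` object, and `renderNode` are hypothetical names, and the "components" are plain functions returning strings where a real app would register React or Vue components.

```typescript
// Hypothetical message shape for one UI node emitted by the agent.
interface UINode {
  type: string;
  props: Record<string, unknown>;
}

// Stand-in for a React/Vue component: takes props, returns a string.
type Component = (props: Record<string, unknown>) => string;

// Define: map JSON type names to components you own.
const registry: Record<string, Component> = {
  WeatherCard: (p) => `[WeatherCard ${String(p.city)}: ${String(p.tempC)}C]`,
  StockChart: (p) => `[StockChart ${String(p.symbol)}]`,
};

// Render: look up the type in the registry. Unknown types get a harmless
// placeholder instead of being interpreted in any way.
function renderNode(node: UINode): string {
  const known = Object.prototype.hasOwnProperty.call(registry, node.type);
  const component = known ? registry[node.type] : undefined;
  return component ? component(node.props) : `[unsupported: ${node.type}]`;
}

// Prompt: the model produces this JSON; a literal string stands in for it here.
const agentOutput = '{"type":"WeatherCard","props":{"city":"Oslo","tempC":4}}';
const rendered = renderNode(JSON.parse(agentOutput) as UINode);
console.log(rendered);
```

The own-property check matters: a type name such as `constructor` exists on every JavaScript object's prototype, so a bare `registry[node.type]` lookup would not be a reliable allowlist.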

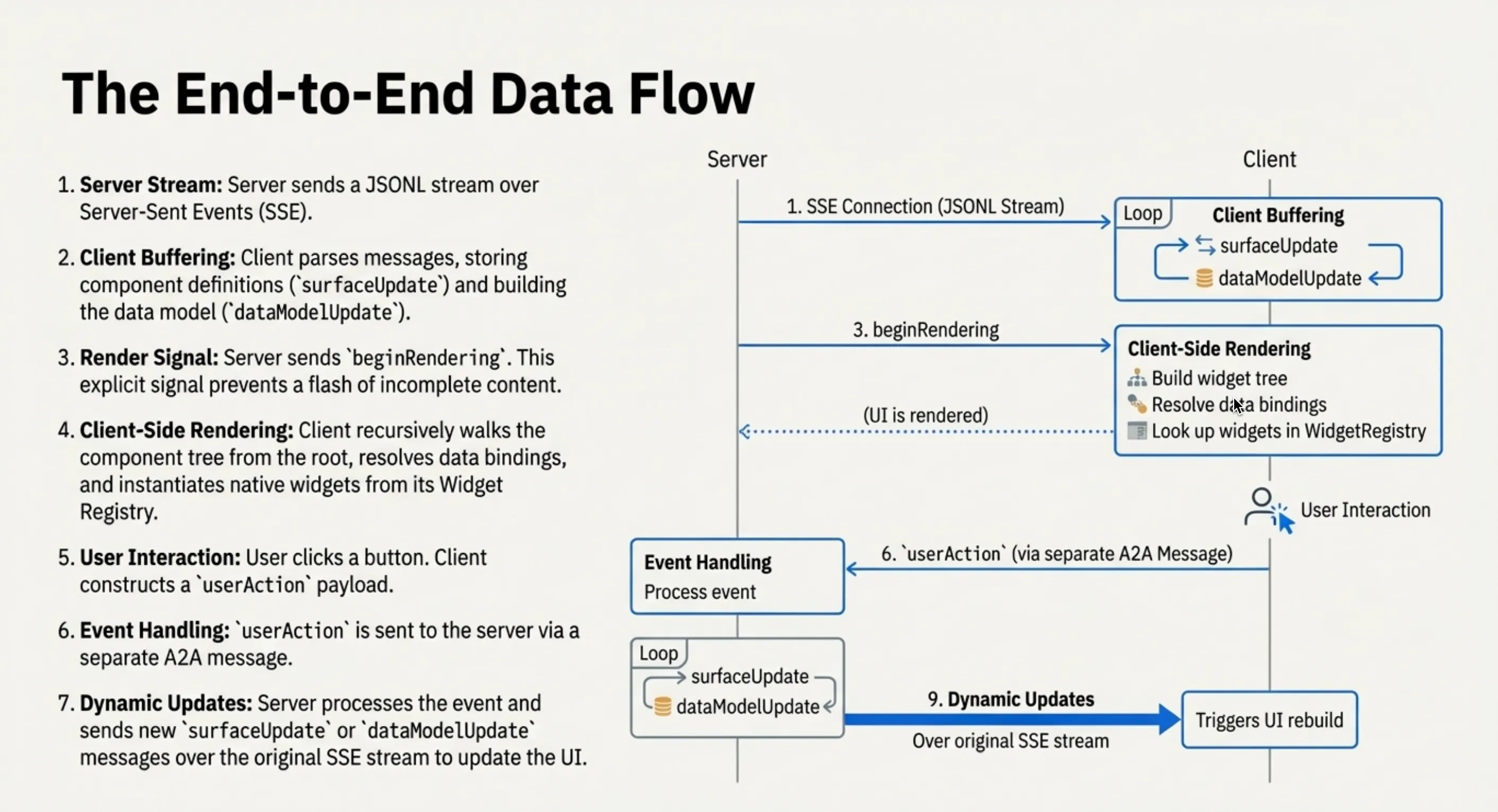

Protocol Flow

Agent-generated UIs cross trust boundaries safely using declarative schemas.

How Generative UI Actually Works

Unlike a traditional "Chat UI", where the LLM just streams markdown text, the A2UI protocol establishes a two-way, type-safe communication channel between your AI agents and your client-side application.

1. The Negotiation Phase

When a user session starts, the Client Application (React, Vue, Flutter, etc.) sends a `system_prompt` containing the Component Schema. This tells the LLM exactly which UI elements are available (e.g., `WeatherCard`, `StockChart`, `BookingForm`) and their prop definitions.
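One way to picture the negotiation step is a helper that turns a component schema into the system prompt text. The schema format (component name to prop name to primitive type), the prompt wording, and `buildSystemPrompt` are all assumptions made for illustration; the protocol's actual wire format may differ.

```typescript
// Hypothetical schema format: component name -> prop name -> primitive type.
type PropType = "string" | "number" | "boolean";
type ComponentSchema = Record<string, Record<string, PropType>>;

const schema: ComponentSchema = {
  WeatherCard: { city: "string", tempC: "number" },
  BookingForm: { flightId: "string", seats: "number" },
};

// Build the text that advertises the available components to the LLM.
function buildSystemPrompt(s: ComponentSchema): string {
  const lines = Object.keys(s).map((name) => {
    const props = s[name];
    const propList = Object.keys(props)
      .map((prop) => `${prop}: ${props[prop]}`)
      .join(", ");
    return `- ${name} { ${propList} }`;
  });
  return [
    'Reply only with JSON of the form {"type": <component>, "props": {...}}.',
    "Available components:",
  ]
    .concat(lines)
    .join("\n");
}

const systemPrompt = buildSystemPrompt(schema);
console.log(systemPrompt);
```

Generating the prompt from the same schema object the client uses for validation keeps the two from drifting apart.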

2. The Generation Phase

Instead of hallucinating HTML tags (which is a security risk), the Agent generates Structured JSON adhering strictly to your schema. This ensures that the AI can only "speak" in valid UI components that you have explicitly allowed.
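On the client, "only allowed components" comes down to an allowlist check before anything is rendered. The sketch below assumes a single-object message with `type` and `props` fields; `allowedTypes`, `UIMessage`, and `parseAgentOutput` are hypothetical names, not part of a published API.

```typescript
// Hypothetical allowlist: the component types the client advertised.
const allowedTypes = ["WeatherCard", "StockChart", "BookingForm"];

interface UIMessage {
  type: string;
  props: Record<string, unknown>;
}

type ParseResult =
  | { kind: "accepted"; message: UIMessage }
  | { kind: "rejected"; reason: string };

// Parse raw model output and accept it only if it names an allowed component.
function parseAgentOutput(raw: string): ParseResult {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return { kind: "rejected", reason: "not valid JSON" };
  }
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    return { kind: "rejected", reason: "not a JSON object" };
  }
  const candidate = data as { type?: unknown; props?: unknown };
  if (typeof candidate.type !== "string" || allowedTypes.indexOf(candidate.type) === -1) {
    return { kind: "rejected", reason: "component type not allowed" };
  }
  const props = candidate.props;
  if (typeof props !== "object" || props === null || Array.isArray(props)) {
    return { kind: "rejected", reason: "props must be an object" };
  }
  return {
    kind: "accepted",
    message: { type: candidate.type, props: props as Record<string, unknown> },
  };
}

const good = parseAgentOutput('{"type":"WeatherCard","props":{"city":"Oslo"}}');
const bad = parseAgentOutput('{"type":"script","props":{"src":"evil.js"}}');
console.log(good.kind, bad.kind);
```

Raw HTML or free text from the model fails at the first step, since it is not valid JSON, and never reaches the renderer.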

3. The Rendering Phase

Your frontend application receives this JSON stream. A dedicated A2UI Renderer mapped to your design system (Tailwind, Material UI, Shadcn) instantly hydrates these JSON nodes into real, interactive DOM elements. The result is a Native User Interface that feels indistinguishable from handwritten code.

Generic HTML vs. A2UI Protocol

Why does this matter for SEO?

Implementing A2UI makes your AI application accessible, indexable, and significantly faster—improving your Core Web Vitals and search rankings compared to iframe-based solutions.

Why Developers Choose A2UI

The standard interface for building production-grade Agentic Applications. Stop fighting with prompt engineering for HTML—start using a deterministic protocol.

Type-Safe Protocol

End-to-end type safety between your Agent's thoughts and your UI. If the LLM hallucinates an invalid prop, A2UI catches it before render.
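Catching an invalid prop before render can be as simple as comparing each prop against a declared type. This is a sketch under assumptions: the flat `PropSchema` format and the `validateProps` helper are hypothetical, and a production setup might use a full JSON Schema validator instead.

```typescript
// Hypothetical per-component prop schema: prop name -> expected primitive type.
type PropType = "string" | "number" | "boolean";
type PropSchema = Record<string, PropType>;

const stockChartSchema: PropSchema = { symbol: "string", days: "number" };

// Return a list of problems; an empty list means the props are safe to render.
function validateProps(schema: PropSchema, props: Record<string, unknown>): string[] {
  const errors: string[] = [];
  // Every declared prop must be present and have the declared type.
  for (const name of Object.keys(schema)) {
    if (!Object.prototype.hasOwnProperty.call(props, name)) {
      errors.push(`missing prop "${name}"`);
    } else if (typeof props[name] !== schema[name]) {
      errors.push(`prop "${name}" should be ${schema[name]}, got ${typeof props[name]}`);
    }
  }
  // Props the schema does not declare are rejected rather than passed through.
  for (const name of Object.keys(props)) {
    if (!Object.prototype.hasOwnProperty.call(schema, name)) {
      errors.push(`unknown prop "${name}"`);
    }
  }
  return errors;
}

const propErrors = validateProps(stockChartSchema, {
  symbol: "ACME",
  days: "30", // wrong type: the model produced a string instead of a number
  onClick: "alert(1)", // not declared in the schema
});
console.log(propErrors);
```

Rejecting undeclared props, rather than ignoring them, keeps anything the design system did not anticipate away from the components.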

Design System Native

Don't settle for generic AI styles. A2UI uses your existing Tailwind, Shadcn, or Material UI components so everything looks on-brand.

Streaming Support

Render UI components incrementally as the LLM generates them. Reduce perceived latency and keep users engaged with real-time feedback.
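Incremental rendering needs a parser that tolerates JSON arriving in arbitrary chunks. The sketch below assumes the agent emits one JSON node per line (newline-delimited JSON), which is an assumption for illustration rather than a documented A2UI framing; `createStreamParser` is a hypothetical helper.

```typescript
interface UINode {
  type: string;
  props: Record<string, unknown>;
}

// Returns a push(chunk) function that buffers text and calls onNode once for
// each completed line. A malformed line makes JSON.parse throw, so real code
// would wrap the parse in error handling.
function createStreamParser(onNode: (node: UINode) => void): (chunk: string) => void {
  let buffer = "";
  return (chunk: string): void => {
    buffer += chunk;
    let newline = buffer.indexOf("\n");
    while (newline !== -1) {
      const line = buffer.slice(0, newline).trim();
      buffer = buffer.slice(newline + 1);
      if (line.length > 0) {
        onNode(JSON.parse(line) as UINode);
      }
      newline = buffer.indexOf("\n");
    }
  };
}

// Record the type of each node as soon as it is complete.
const seen: string[] = [];
const push = createStreamParser((node) => {
  seen.push(node.type);
});

// Chunks split mid-token, as they might arrive from a streaming LLM response.
push('{"type":"WeatherCard","pro');
push('ps":{"city":"Oslo"}}\n{"type":"Stock');
push('Chart","props":{"symbol":"ACME"}}\n');
console.log(seen);
```

Each node can be handed to the renderer the moment its line completes, so the first component appears while the model is still generating the second.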

Cross-Platform

One agent thought, everywhere. Render the same JSON response on Web (React/Vue), Mobile (React Native/Flutter), and Desktop.

Model Agnostic

Works with OpenAI GPT-4, Anthropic Claude 3.5, Google Gemini, Meta Llama 3, and any model capable of structured JSON output.

Framework Agnostic

Astro, Next.js, Remix, Nuxt, SvelteKit... A2UI is just a protocol standard. Implementation adapters exist for all modern stacks.

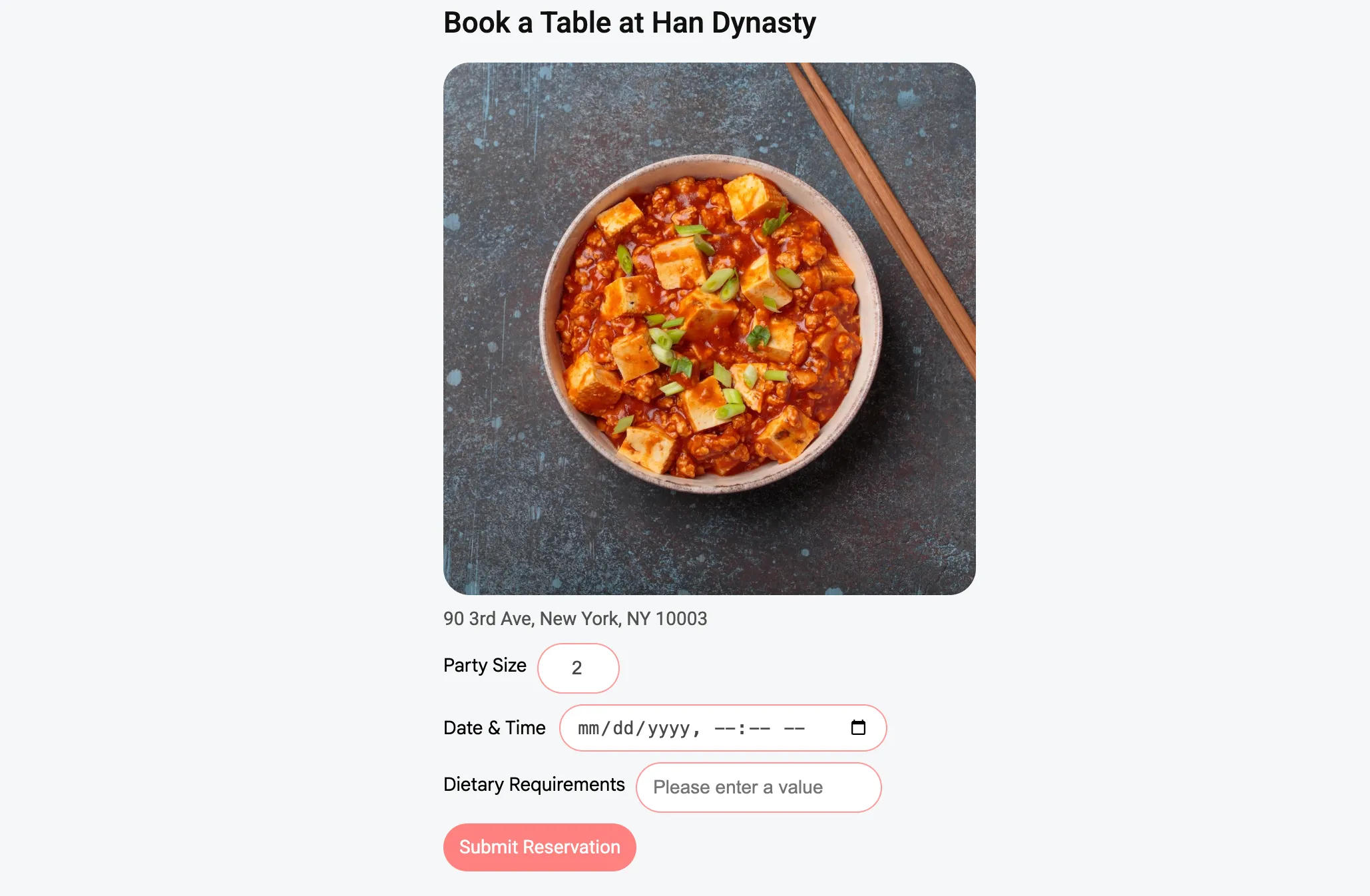

The Problem: Agents need to speak UI

Text-only interactions are slow and inefficient for complex tasks. Instead of a clunky back-and-forth about availability, A2UI allows agents to instantly render a bespoke booking interface.

A2UI in Action

Watch an agent generate a complete landscape architect application interface from a single photo upload.

Trusted by Industry Leaders

A2UI was a great fit for Flutter's GenUI SDK because it ensures that every user, on every platform, gets a high quality native feeling experience.

It gives us the flexibility to let the AI drive the user experience in novel ways... Its declarative nature and focus on security allow us to experiment quickly and safely.

A2UI changes that with a 'native-first' approach: Agents send a description of UI components, not code. Your app maps these to its own trusted design system, maintaining perfect brand consistency and security.